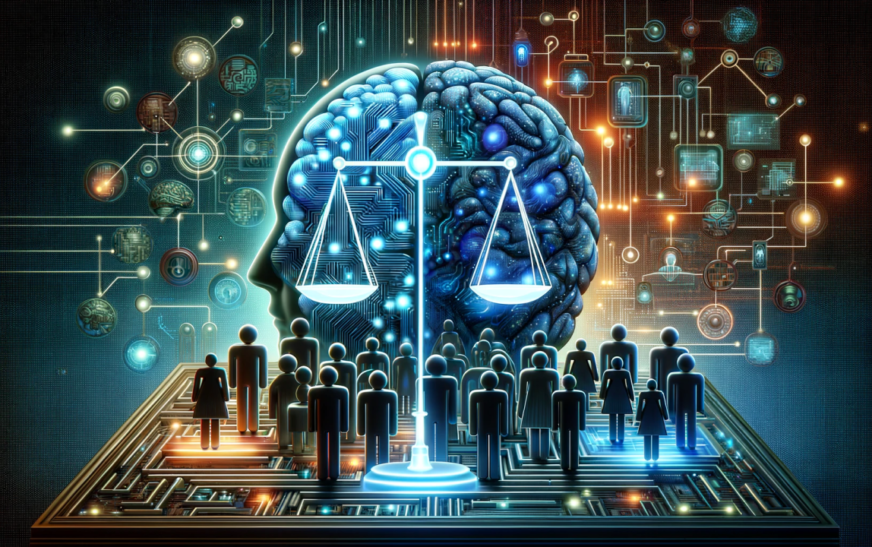

Introduction to AI Ethics

Artificial Intelligence is rapidly reshaping our world. From healthcare to finance, algorithms are making decisions that impact lives daily. But with great power comes great responsibility. As AI becomes more integrated into our society, the ethical implications of these technologies demand our attention.

One pressing concern in the realm of AI ethics is bias. Algorithms, designed to assist and enhance human decision-making, can inadvertently perpetuate prejudices present in their training data. This creates a ripple effect that influences everything from hiring practices to law enforcement.

So how do we ensure fairness in algorithms? Exploring this question unveils a complex landscape filled with challenges and opportunities for improvement. Let’s dive deeper into the intricacies of AI ethics as we navigate the road toward addressing bias and ensuring equitable outcomes for all users.

The Problem of Bias in Algorithms

Bias in algorithms is a critical issue that plagues the world of artificial intelligence. It often stems from the data used to train these systems, which can reflect societal prejudices.

When biased data enters an algorithm, it can perpetuate stereotypes and unfair treatment. For instance, facial recognition technology has been shown to misidentify individuals at different rates depending on race or gender. This not only impacts personal lives but also raises serious ethical concerns.

Moreover, bias can seep into hiring practices when AI tools are employed to screen resumes. The repercussions affect job opportunities for countless qualified candidates simply because the algorithm learned from flawed historical data.

Addressing this problem requires us to scrutinize our datasets and question underlying assumptions in AI development. Transparency and accountability must become integral parts of our approach if we hope to create fairer technological solutions for everyone.

Examples of Bias in AI

AI systems have been under scrutiny for their inherent biases, which can lead to significant real-world consequences. One notorious example is facial recognition technology. Studies show that these algorithms often misidentify people of color at higher rates than white individuals, leading to wrongful accusations and privacy violations.
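
To make the idea of "higher misidentification rates" concrete, here is a minimal sketch of how such a gap could be measured. The record layout, the group labels, and the sample data are all assumptions made for illustration; they do not come from any real study or system.

```python
from collections import defaultdict


def misidentification_rate_by_group(records):
    """Return the share of wrong identifications for each group.

    Each record is a (group, predicted_id, true_id) tuple. This layout
    is a hypothetical one chosen for the sketch.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted_id, true_id in records:
        totals[group] += 1
        if predicted_id != true_id:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up evaluation results, for illustration only.
records = [
    ("group_a", "p1", "p1"),
    ("group_a", "p2", "p2"),
    ("group_a", "p3", "p3"),
    ("group_a", "p4", "p9"),  # wrong match
    ("group_b", "p5", "p5"),
    ("group_b", "p6", "p7"),  # wrong match
    ("group_b", "p8", "p2"),  # wrong match
    ("group_b", "p9", "p9"),
]

rates = misidentification_rate_by_group(records)
print(rates)
```

In this toy data the system is wrong for one in four people in the first group and two in four in the second. A real evaluation would need far more samples per group before drawing any conclusion.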

Another area affected by bias is hiring. Some AI tools used for recruiting have shown a tendency to favor male candidates over female ones. This reinforces existing disparities in the workplace and perpetuates gender inequality.
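
One simple way to check for this kind of skew is to compare how often candidates from each group get shortlisted. The sketch below assumes a list of (group, shortlisted) pairs; the function names and the numbers are hypothetical and chosen only to show the calculation.

```python
def selection_rates(decisions):
    """Map each group to the fraction of its candidates who were shortlisted.

    `decisions` is a list of (group, shortlisted) pairs, where
    `shortlisted` is True or False.
    """
    counts = {}
    for group, shortlisted in decisions:
        selected, total = counts.get(group, (0, 0))
        counts[group] = (selected + int(shortlisted), total + 1)
    return {group: s / t for group, (s, t) in counts.items()}


def selection_rate_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to report
    return min(rates.values()) / highest


# Made-up screening outcomes, for illustration only.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = selection_rate_ratio(rates)
print(rates, ratio)
```

Here women are shortlisted at half the rate of men. A ratio well below 1.0 does not prove the tool is unfair on its own, but it is a signal that the screening step deserves a closer look.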

Additionally, predictive policing algorithms raise concerns about racial profiling. When data from previous arrests skew towards certain demographics, the system may unfairly target communities already burdened by systemic issues.
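
The self-reinforcing nature of this problem can be sketched with a deliberately simplified toy model. It assumes two districts with the same underlying incident rate, patrols allocated in proportion to past recorded arrests, and new arrests that scale with patrol presence. None of this describes a real policing system; the names and numbers are hypothetical.

```python
def simulate_patrol_allocation(initial_arrests, true_rates, total_patrols, rounds):
    """Allocate patrols in proportion to recorded arrests, round after round.

    Returns the patrol allocation used in each round.
    """
    arrests = dict(initial_arrests)
    history = []
    for _ in range(rounds):
        total = sum(arrests.values())
        patrols = {d: total_patrols * arrests[d] / total for d in arrests}
        # Recorded arrests depend on where patrols are sent,
        # not only on the underlying incident rate.
        for d in arrests:
            arrests[d] += patrols[d] * true_rates[d]
        history.append(patrols)
    return history


# Both districts have the same underlying rate; only the starting records differ.
history = simulate_patrol_allocation(
    initial_arrests={"north": 70, "south": 30},
    true_rates={"north": 0.1, "south": 0.1},
    total_patrols=100,
    rounds=5,
)
print(history[-1])
```

Even though both districts behave identically in this model, the district that started with more recorded arrests keeps receiving about 70 percent of the patrols in every round. The data never gets a chance to correct the initial skew.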

These examples illustrate just how pervasive bias can be within AI models, revealing an urgent need for transparency and accountability in algorithm development.

Impact of Biased Algorithms on Society

Biased algorithms can have far-reaching effects on society. They influence decision-making in critical areas such as hiring, lending, and law enforcement. When these systems operate with embedded biases, they perpetuate inequality.

For example, a recruitment algorithm might favor candidates from certain backgrounds while sidelining equally qualified individuals from underrepresented groups. This not only affects job seekers but also hinders workplace diversity.

In law enforcement, biased predictive policing tools may target specific communities unfairly. This creates a cycle of mistrust between authorities and citizens, exacerbating existing tensions.

Education is another realm where bias manifests. Admissions algorithms that overlook diverse experiences can deny students opportunities based solely on skewed datasets.

Such outcomes ripple through society, deepening divisions and hindering progress toward equity. Addressing these biases is crucial for fostering an inclusive future where technology works for everyone rather than against them.

Efforts to Address Bias and Fairness in AI

Various organizations and researchers are actively working to tackle bias in AI systems. One approach involves the development of diverse datasets. When training algorithms, it’s crucial that data represents all demographics fairly.
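
A first step toward a more representative dataset is simply measuring who is in it. The following sketch compares each group's share of a training set against a reference share, such as census figures. The group names and the reference shares are invented for the example.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    Positive values mean the group is over-represented in the dataset,
    negative values mean it is under-represented.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Made-up dataset composition and reference shares, for illustration only.
sample_groups = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
reference_shares = {"a": 0.6, "b": 0.25, "c": 0.15}

gaps = representation_gaps(sample_groups, reference_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

In this example group "a" is over-represented by 20 percentage points, while groups "b" and "c" each fall 10 points short. Matching proportions is only one notion of fair representation, and small groups may need more than their proportional share to be modeled well.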

Another effort centers on algorithm audits. These evaluations help identify biases lurking within models before deployment. Transparency plays a significant role; some companies now publish their methodologies and findings to support accountability.
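
As a rough illustration of what one audit check might look like, the sketch below compares true positive rates across groups, meaning how often genuinely qualified cases are approved in each group. The 0.1 tolerance, the row layout, and the data are assumptions for this example, not an established standard.

```python
def true_positive_rate(labels, predictions):
    """Share of truly positive cases that the model also marked positive."""
    hits = [p for y, p in zip(labels, predictions) if y == 1]
    if not hits:
        return None  # no positive cases, so the rate is undefined
    return sum(hits) / len(hits)


def audit_tpr_gap(rows, max_gap=0.1):
    """Compare true positive rates across groups.

    `rows` holds (group, label, prediction) tuples with 0/1 values.
    `max_gap` is a hypothetical tolerance chosen for this sketch.
    """
    by_group = {}
    for group, label, prediction in rows:
        labels, preds = by_group.setdefault(group, ([], []))
        labels.append(label)
        preds.append(prediction)
    tprs = {g: true_positive_rate(l, p) for g, (l, p) in by_group.items()}
    known = [v for v in tprs.values() if v is not None]
    gap = max(known) - min(known) if known else 0.0
    return {"tpr": tprs, "gap": gap, "passed": gap <= max_gap}


# Made-up audit data, for illustration only.
rows = [
    ("x", 1, 1), ("x", 1, 1), ("x", 1, 1), ("x", 1, 0), ("x", 0, 0),
    ("y", 1, 1), ("y", 1, 0), ("y", 1, 0), ("y", 1, 1), ("y", 0, 1),
]

report = audit_tpr_gap(rows)
print(report)
```

With this data the model approves 75 percent of qualified cases in group "x" but only 50 percent in group "y", so the check fails. A fuller audit would look at several metrics, since different fairness measures can disagree with one another.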

Collaborative frameworks have also emerged. Industry stakeholders, including startups and tech giants, join forces to share best practices for fairness in AI design.

Regulatory bodies are not standing idle either. New guidelines push companies toward ethical AI practices and encourage compliance with standards that prioritize equity and justice.

Education is key as well. Workshops and resources aimed at developers raise awareness about potential pitfalls in creating biased algorithms while promoting an inclusive mindset from the ground up.

Ethical Guidelines for Developing and Implementing AI Systems

Establishing ethical guidelines is crucial for the development of AI systems. These guidelines should emphasize transparency. Users must understand how algorithms arrive at decisions.

Accountability also plays a significant role. Developers need to take responsibility for their creations. This fosters trust and ensures that AI technologies are used responsibly.

Inclusivity is another key aspect. Diverse teams can better identify potential biases in algorithms, leading to more equitable outcomes. It’s important that various perspectives shape AI development.

Regular audits and assessments help maintain fairness over time. Continual monitoring allows organizations to address any emerging biases promptly.
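
Ongoing monitoring can be as simple as recomputing a fairness gap for each reporting period and flagging the periods where it grows too large. This sketch assumes per-period approval rates are already available for each group; the threshold, period labels, and rates are hypothetical.

```python
def flag_drift(period_rates, threshold=0.1):
    """Return the periods in which the gap between group rates exceeds `threshold`.

    `period_rates` maps a period label to a dict of group -> approval rate.
    """
    flagged = []
    for period, rates in period_rates.items():
        gap = max(rates.values()) - min(rates.values())
        if gap > threshold:
            flagged.append(period)
    return flagged


# Made-up quarterly approval rates, for illustration only.
period_rates = {
    "2024-Q1": {"group_a": 0.52, "group_b": 0.50},
    "2024-Q2": {"group_a": 0.55, "group_b": 0.47},
    "2024-Q3": {"group_a": 0.58, "group_b": 0.41},
}

flagged = flag_drift(period_rates)
print(flagged)
```

The gap widens each quarter in this toy data and crosses the threshold in the third one, which is the kind of emerging bias a one-time audit before launch would miss.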

User privacy cannot be overlooked either. Protecting personal data builds confidence among users and enhances the overall integrity of AI solutions.

Conclusion: The Future of AI Ethics

The landscape of AI ethics is rapidly evolving. As technology advances, the call for accountability and transparency grows louder. Addressing bias and fairness in algorithms is no longer optional; it’s a necessity.

Developers, companies, and policymakers must collaborate to ensure ethical standards are not just theoretical concepts but practical realities. The future will require continuous monitoring of AI systems to identify biases early, ensuring that these technologies serve everyone equitably.

As we move forward, education around AI ethics should be prioritized. It’s essential for those creating algorithms to understand the implications of their work on society at large. By promoting diversity within tech teams, we can foster a more inclusive approach to algorithm design.

Public awareness also plays a crucial role. An informed populace can hold organizations accountable for any discriminatory practices that arise from biased algorithms. Together, stakeholders across all sectors have the potential to shape an ethical framework that champions fairness in artificial intelligence.

The journey toward responsible AI development is ongoing yet promising. It calls for a commitment to creating equitable solutions that respect human dignity while harnessing the incredible possibilities offered by technology.